I want to run any number of Android UI tests on each PR. Existing solutions. Part III

Hello everyone!

I will continue my series of articles on selecting the appropriate infrastructure for running UI tests on each PR. This article and the next two will analyze each potential solution in detail, culminating in a final comparison.

In this article, I will discuss the most popular solutions for running UI tests on Android: BrowserStack and Firebase Test Lab. The fourth article will focus on less popular but still familiar solutions for developers, such as SauceLabs, AWS Device Farm, LambdaTest, and Perfecto Mobile. The last article will discuss The Young and the Restless solutions: emulator.wtf and Marathon Cloud.

Let’s go!

Photo by James Mills on Unsplash

[DISCLAIMER]

At the outset, I must say that I hold the position of Co-Founder at MarathonLabs, the company whose solution (Marathon Cloud) is being discussed in this series. While I have attempted to remain impartial, I acknowledge that some of my views may be biased. Therefore, I would appreciate any feedback to ensure that this research remains objective.

Also, I must clarify that this study does not aim to provide an exhaustive evaluation of the products in question. Rather, it is based on my brief interactions with each one and reflects my personal opinions. Assessing factors like stability and scalability can be difficult when working with trial versions of solutions, as there may be insufficient data or time to arrive at definitive conclusions. Therefore, some of my conclusions may be subjective or inaccurate. Nonetheless, I trust that these articles will serve as a useful guide in navigating the complex landscape of UI Testing Infrastructure.

BrowserStack

Supported platforms

BrowserStack supports a wide range of frameworks for mobile, web, and cross-platform testing.

Interface

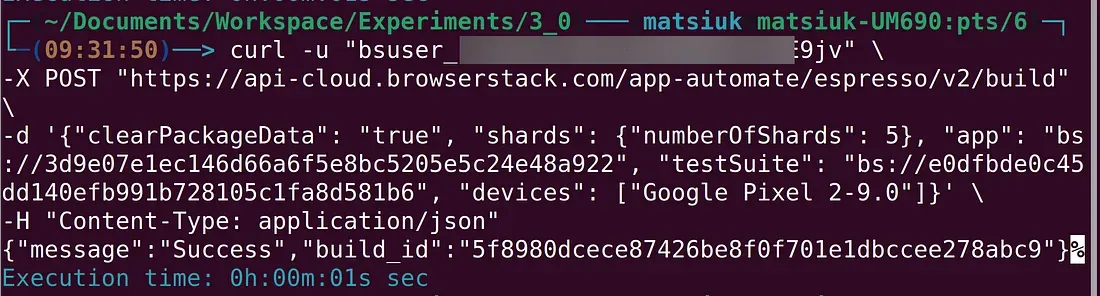

Installing and using the CLI tool provided by BrowserStack is relatively simple, and the documentation is clear and easy to follow. A good starting point is the Espresso chapter of the BrowserStack documentation.

When using the BrowserStack CLI, developers run separate commands to upload the APKs and to run the test suite, and the CLI does not track the run while it executes.

To check on the progress of your tests, you need to open the BrowserStack web UI. All configuration is passed as CLI parameters; there are no configuration files.

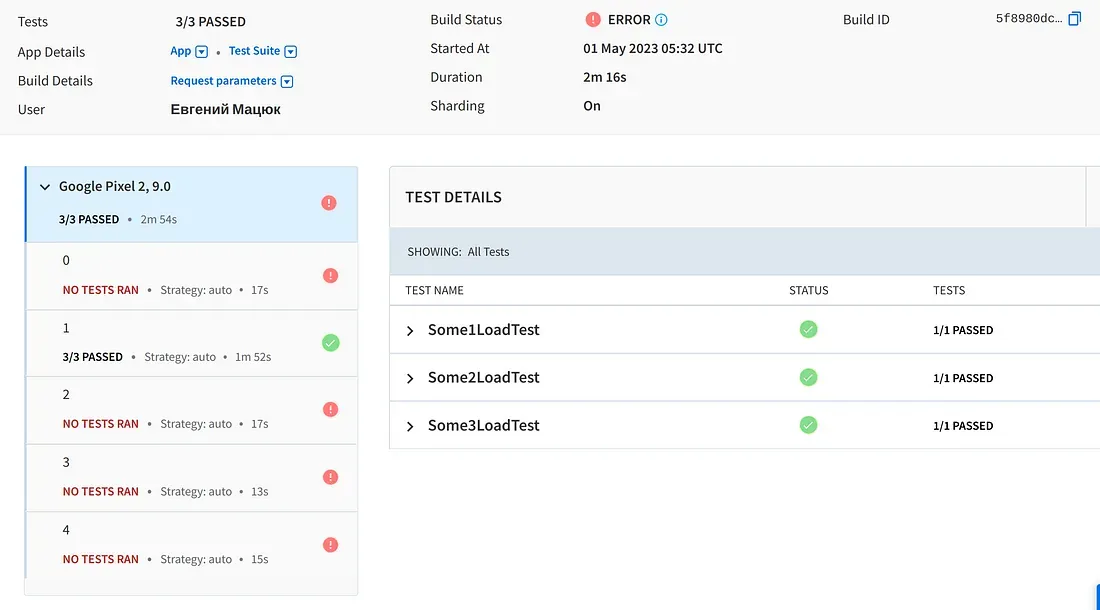

Stability

It’s disappointing that BrowserStack lacks a retry mechanism. In addition, BrowserStack offers only real devices and no emulators, which can hurt stability.

I ran into tests that were only occasionally flaky (just 1–2 out of 50), yet without retries they caused the entire test suite to fail. Another frustrating aspect is that the test suite also fails if I request more shards than there are tests.

BrowserStack does support the “clearPackageData” option.

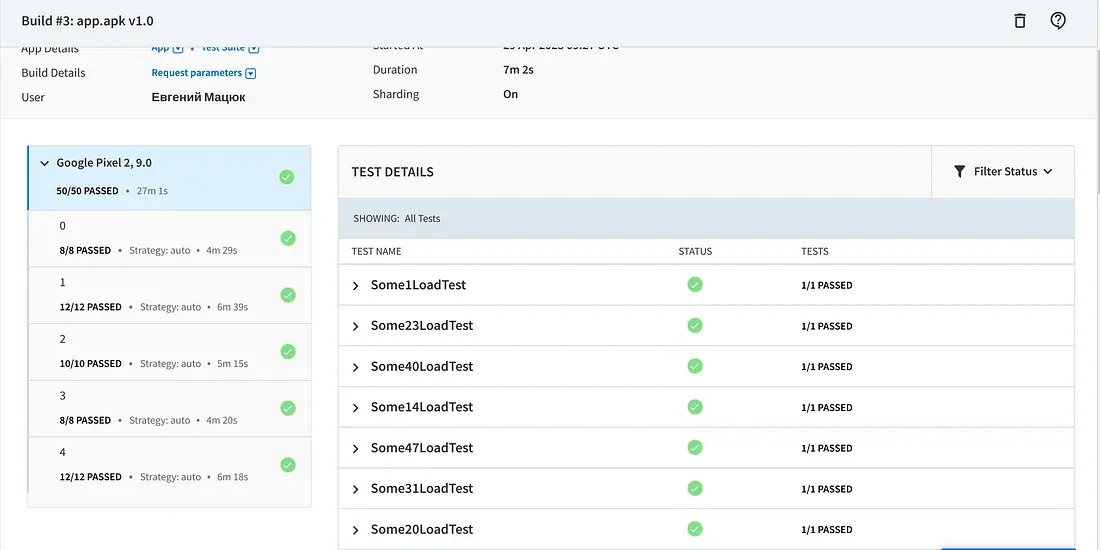

Time and Scalability

To parallelize test suite execution, the user can select the number of shards and the sharding strategy. The documentation provides all the necessary information.
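To illustrate the shard-count failure mentioned above and a simple guard against it, here is a minimal Python sketch of a uniform round-robin split that clamps the shard count to the number of tests. It is not BrowserStack’s own sharding strategy; the function name `split_into_shards` and the test names are hypothetical.

```python
from __future__ import annotations


def split_into_shards(tests: list[str], requested_shards: int) -> list[list[str]]:
    """Distribute tests round-robin across shards without creating empty shards."""
    if requested_shards < 1:
        raise ValueError("requested_shards must be at least 1")
    # Clamp: never ask for more shards than there are tests (keep at least one shard).
    shard_count = max(1, min(requested_shards, len(tests)))
    shards: list[list[str]] = [[] for _ in range(shard_count)]
    for index, test in enumerate(tests):
        shards[index % shard_count].append(test)
    return shards


# Hypothetical test names: 3 tests but 5 requested shards -> 3 shards of one test each.
tests = [f"com.example.SampleTest#test{i}" for i in range(1, 4)]
print(split_into_shards(tests, 5))
```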

One of the most interesting parts of this study is running actual experiments and measuring time. For these runs, I prepared two test samples:

- The “50_0” sample comprises 50 UI tests, each taking approximately 30 seconds to complete. None of these tests are flaky.

- The “50_15” sample also includes 50 UI tests of the same duration, but 15% of them are flaky: I used a simple random function to make these tests fail with a 15% probability.
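The description of the 50_15 sample can be read in two ways: every test fails with a probability of 15%, or about 15% of the tests (8 of 50) are flaky and each of them fails with a probability of 15%. The hypothetical Python simulation below is not the code used in the actual Android tests; it estimates, for either reading, how often a run without retries comes out fully green, assuming failures are independent.

```python
from __future__ import annotations

import random


def simulate_suite(num_tests: int, num_flaky: int, fail_probability: float,
                   runs: int, seed: int = 42) -> tuple[float, float]:
    """Simulate suite runs without retries.

    Returns (share of runs in which every test passed, average failed tests per run).
    """
    if not 0 <= num_flaky <= num_tests:
        raise ValueError("num_flaky must be between 0 and num_tests")
    rng = random.Random(seed)  # seeded, so results are reproducible
    green_runs = 0
    total_failures = 0
    for _ in range(runs):
        # Stable tests always pass; each flaky test fails independently.
        failures = sum(rng.random() < fail_probability for _ in range(num_flaky))
        total_failures += failures
        if failures == 0:
            green_runs += 1
    return green_runs / runs, total_failures / runs


# Reading 1: all 50 tests fail with probability 0.15.
print(simulate_suite(50, 50, 0.15, runs=10_000))
# Reading 2: 8 of 50 tests (about 15%) are flaky, each failing with probability 0.15.
print(simulate_suite(50, 8, 0.15, runs=10_000))
```

For reference, the exact green-run probabilities are 0.85^50 ≈ 0.0003 and 0.85^8 ≈ 0.27, so under either reading a suite without retries fails most of the time.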

Wherever possible, I applied the following configuration to every test run:

- use-orchestrator = true

- clear-package-data = true

- Android API = 29

- number of parallels = 5 or 10

The numbers presented here, as well as those for the other products, are based on only three runs, because the free tiers of most products limit the number of runs.

In trial mode, BrowserStack allows up to 5 parallels. Here are the time measurements under the conditions above:

- Upload time of app.apk = 00:00:08

- Upload time of appTest.apk = 00:00:04

- Run of 50_0 suite (5 parallels) = 00:07:02
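A useful yardstick for these numbers is the ideal run time: with perfectly balanced shards and no infrastructure overhead, 50 tests of about 30 seconds each on 5 parallels would take 50 × 30 / 5 = 300 seconds. The Python sketch below (function names are illustrative) compares that lower bound with the measured 00:07:02; since the 30-second test duration is approximate, the result is only a rough indicator.

```python
def to_seconds(hhmmss: str) -> int:
    """Convert an "hh:mm:ss" duration into seconds."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def ideal_run_seconds(num_tests: int, seconds_per_test: float, parallels: int) -> float:
    """Lower bound: perfectly balanced shards and zero infrastructure overhead."""
    return num_tests * seconds_per_test / parallels


def parallel_efficiency(measured: str, num_tests: int,
                        seconds_per_test: float, parallels: int) -> float:
    """Ratio of the ideal run time to the measured wall-clock time (1.0 = no overhead)."""
    return ideal_run_seconds(num_tests, seconds_per_test, parallels) / to_seconds(measured)


# BrowserStack, 50_0 suite, 5 parallels, measured run time from above
print(ideal_run_seconds(50, 30, 5))                        # 300.0
print(f"{parallel_efficiency('00:07:02', 50, 30, 5):.0%}")  # 71%
```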

Reports

BrowserStack reports include dashboards that show the results of each shard and test class.

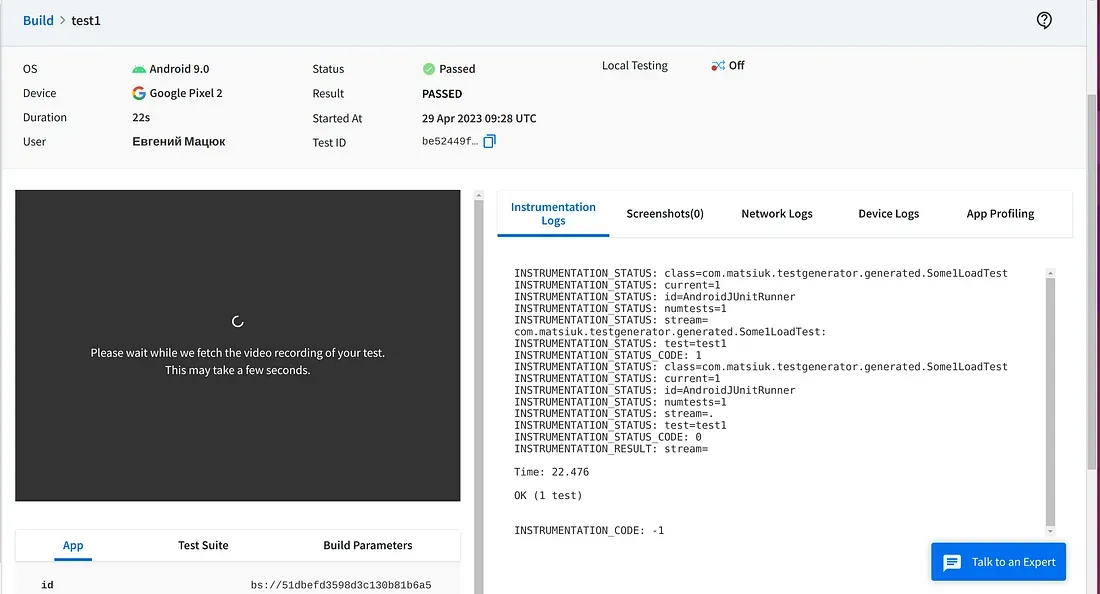

Each test’s details include all the data needed for further investigation, such as stack traces, a video of each test, various logs (including network logs), and more:

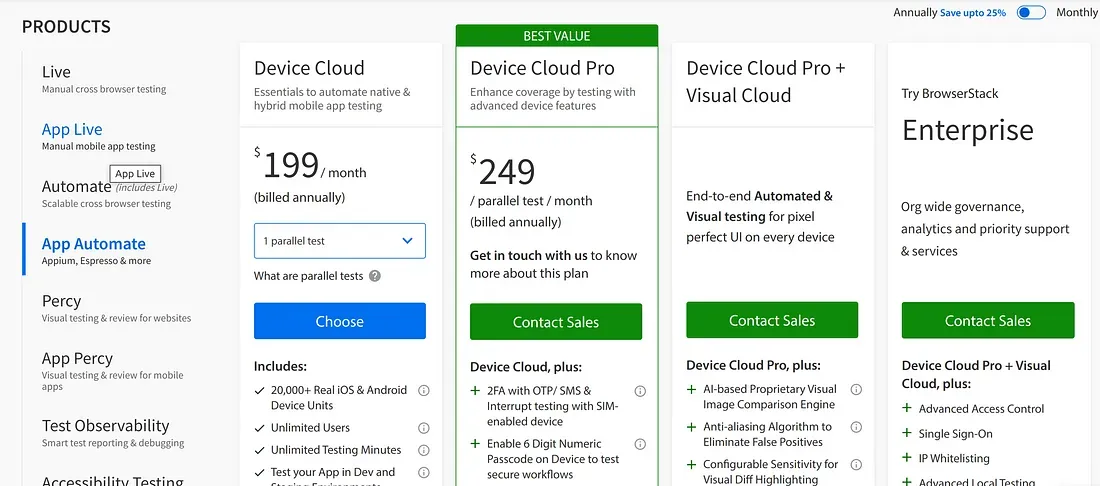

Cost

At BrowserStack, developers rent a set number of devices on a monthly or yearly basis.

As discussed previously, this pricing model falls under the “Pay for a device per month” category. When it comes to running tests on every pull request, however, it is significantly more expensive than the alternatives in the “Pay for spent hours/minutes” category.

Security

Security is an extensive topic that deserves a dedicated article. I touched on it briefly in my previous article, so let me add some details here. As a user, I expect a solution to meet at least the following requirements:

- All communication is done through TLS.

- All artifacts can be securely stored with a defined and manageable time to live (TTL).

- After every testing session, all devices (both emulators and real devices) are wiped so that any app-specific data is removed immediately. This guarantees data privacy and security.

- The solution offers the option to establish a proxy or IPSec connection, which ensures a secure connection between the user’s network and the solution’s network.

- The solution allows you to define different roles for individuals within your organization.

Most infrastructures address the first, second, and fourth points mentioned earlier, but the third point remains a challenge for many farms using real devices. Ensuring the secure erasure of data on these devices can be a complex and demanding task that requires ongoing support and maintenance. As for the fifth point, I haven’t had the opportunity to evaluate it thoroughly since trial modes often lack this functionality, especially for individual users testing the system.

I will not revisit this requirement for each solution unless I notice unexpected drawbacks or exceptional strengths.

Support

Based on the limited number of interactions with the product, it is difficult to draw any definitive conclusions. Therefore, I will omit this requirement for the majority of infrastructures.

Firebase Test Lab

Supported platforms

Firebase Test Lab (FTL) is focused solely on mobile testing, specifically for Android and iOS platforms.

Note that FTL cannot run Appium tests.

Interface

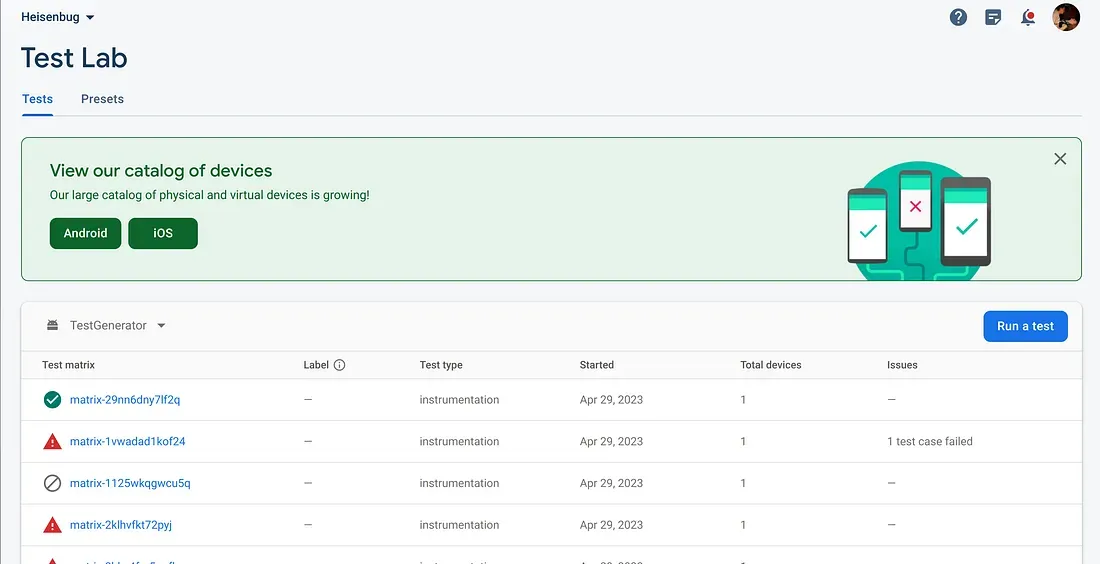

Users can work with FTL through both a CLI tool and a web Dashboard.

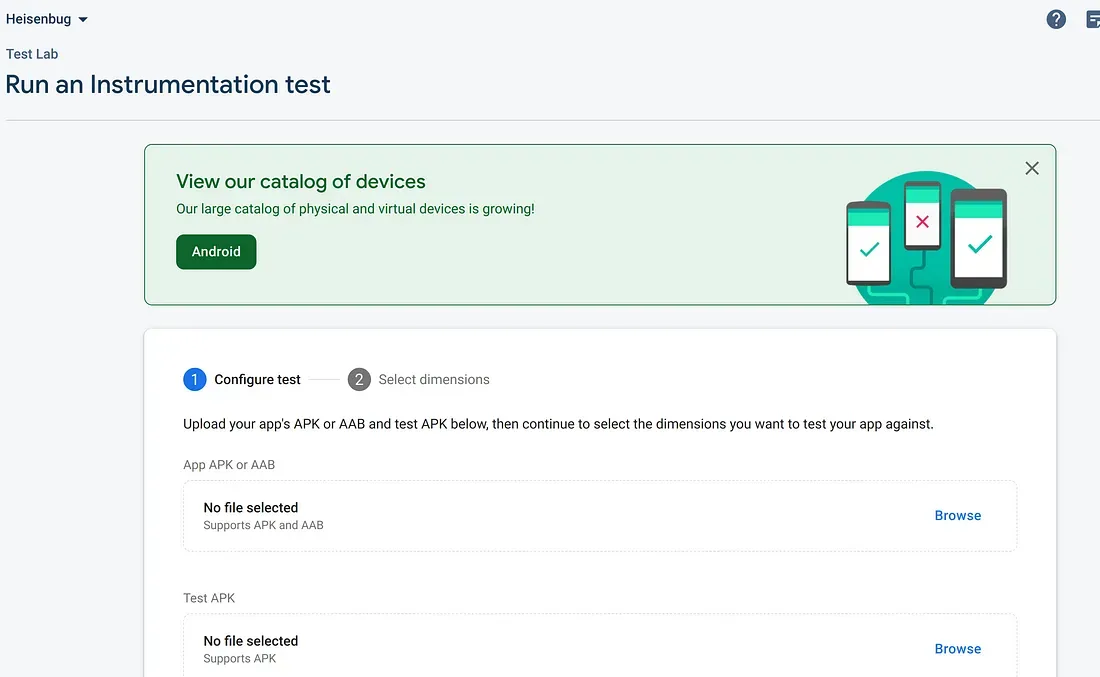

Have a look at the Dashboard:

To start a test run, click the “Run a test” button on the right side of the screen, then select the “Instrumentation” option to open the screen where you prepare the test.

When uploading the APKs, you can adjust the test timeout, choose whether to use the Android Test Orchestrator, and select the number of shards. The Dashboard does not allow more extensive configuration; for that, you need the CLI.

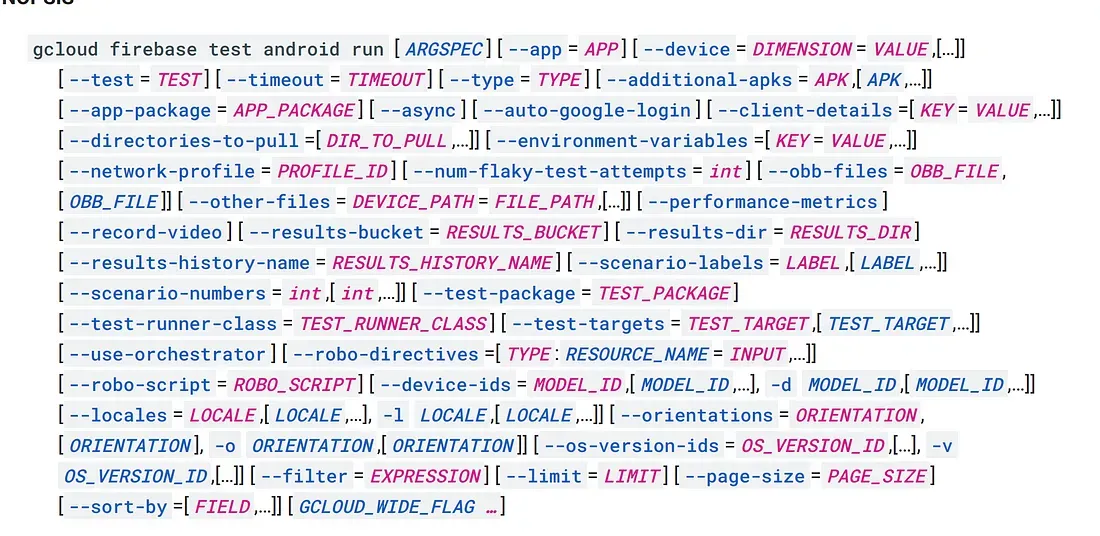

The CLI for FTL is not a tool that developers can simply download and use right away. To begin with, FTL does not have a CLI of its own; all command-line interaction with FTL goes through the gcloud CLI. Setting up gcloud and connecting it to FTL is a challenge in itself. For those interested, I describe the procedure in detail in the “Additional paragraphs” section at the end of this article.

The CLI enables flexibility in configuration.

Oddly, however, sharding is not available in the CLI.
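For reference, the configuration used in these experiments maps onto `gcloud firebase test android run` roughly as follows. This minimal Python sketch only builds the argument list; the helper name is hypothetical, `MODEL_ID` is a placeholder (real IDs can be listed with `gcloud firebase test android models list`), and it assumes gcloud is already set up as described in the “Additional paragraphs” section.

```python
from __future__ import annotations

import shlex


def ftl_run_command(app_apk: str, test_apk: str, device_model: str,
                    api_level: int = 29, flaky_attempts: int = 0) -> list[str]:
    """Build a `gcloud firebase test android run` call mirroring the test configuration."""
    if flaky_attempts < 0:
        raise ValueError("flaky_attempts must not be negative")
    return [
        "gcloud", "firebase", "test", "android", "run",
        "--type=instrumentation",
        f"--app={app_apk}",
        f"--test={test_apk}",
        f"--device=model={device_model},version={api_level}",
        "--use-orchestrator",
        # clearPackageData is applied by Android Test Orchestrator, so keep both flags together.
        "--environment-variables=clearPackageData=true",
        f"--num-flaky-test-attempts={flaky_attempts}",
    ]


# MODEL_ID is a placeholder for a real device model ID.
print(shlex.join(ftl_run_command("app.apk", "appTest.apk", "MODEL_ID", flaky_attempts=2)))
```

Passing the list to `subprocess.run(command, check=True)` would start the run.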

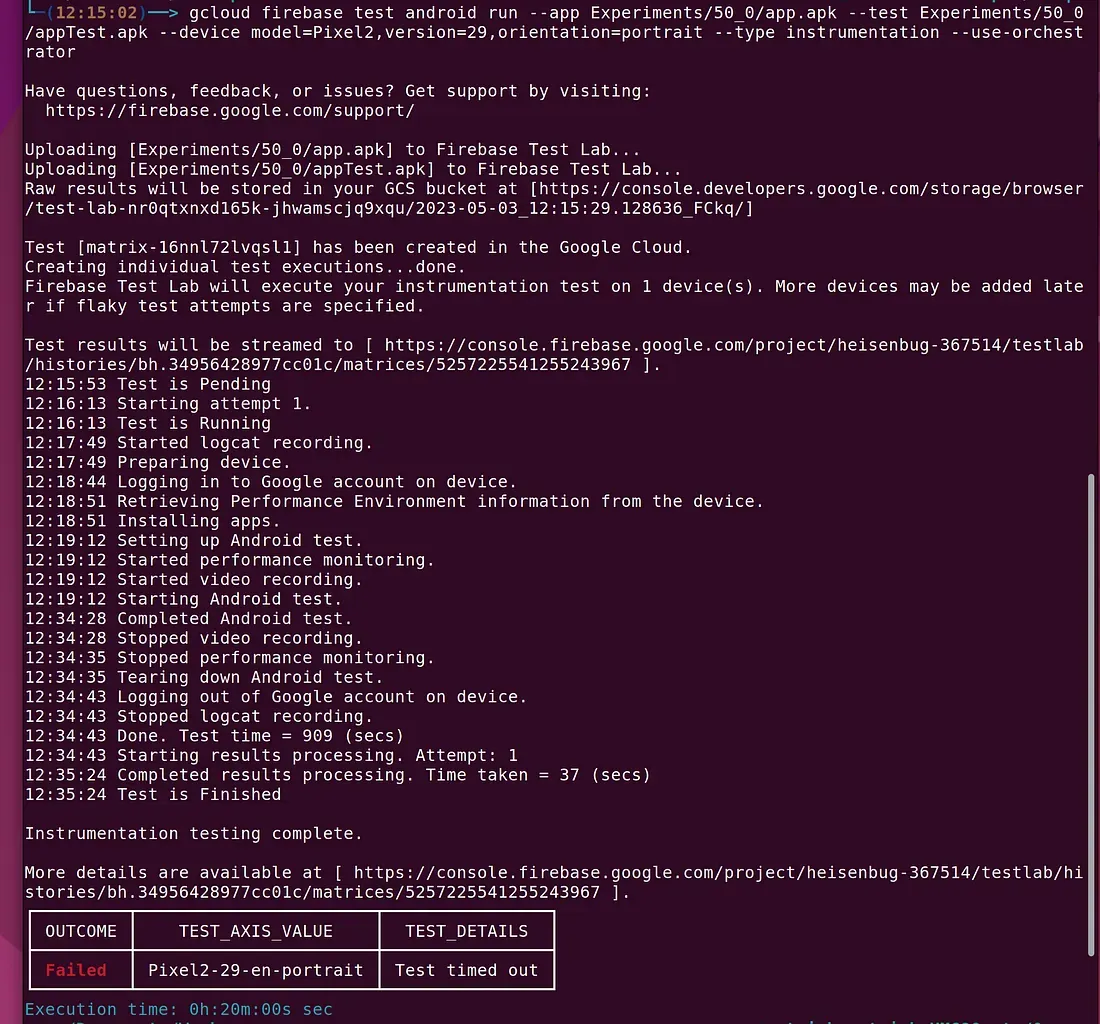

Only the CLI appears to report progress while the run is executing, at least to some extent.

Stability

The availability of emulators and support for the “clear package data” and “use orchestrator” options have undoubtedly improved the overall stability of the infrastructure. In addition, the CLI (but not the Dashboard) provides a useful flag, “--num-flaky-test-attempts”, which appears to work as a retry mechanism, exactly what we need. However, it has two significant drawbacks:

- Instead of rerunning only the failed tests, it reruns the entire test suite.

- Even if all tests eventually pass after the reruns, the build is still marked as failed.
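The cost of the first drawback is easy to quantify. Assuming 50 tests that each fail independently with probability 0.15 (one way to read the 50_15 sample) and up to 3 attempts, the Python sketch below compares retrying only the failed tests with rerunning the whole suite. Both are simplified models, not FTL’s exact scheduling.

```python
from __future__ import annotations


def rerun_failed_only(num_tests: int, p_fail: float, attempts: int) -> tuple[float, float]:
    """Retry each failed test individually, up to `attempts` tries per test.

    Returns (probability the suite ends green, expected number of test executions).
    """
    p_test_green = 1 - p_fail ** attempts
    # Try k (0-based) happens only if the previous k tries of that test all failed.
    runs_per_test = sum(p_fail ** k for k in range(attempts))
    return p_test_green ** num_tests, num_tests * runs_per_test


def rerun_whole_suite(num_tests: int, p_fail: float, attempts: int) -> tuple[float, float]:
    """Rerun the entire suite whenever any test fails, up to `attempts` suite runs."""
    p_suite_red = 1 - (1 - p_fail) ** num_tests
    suite_runs = sum(p_suite_red ** k for k in range(attempts))
    return 1 - p_suite_red ** attempts, num_tests * suite_runs


for name, strategy in (("failed tests only", rerun_failed_only),
                       ("whole suite", rerun_whole_suite)):
    green, executions = strategy(50, 0.15, 3)
    print(f"{name}: P(green) = {green:.4f}, expected executions = {executions:.1f}")
```

Retrying only the failed tests yields a green suite with a probability of about 0.84 at roughly 59 test executions, while rerunning the whole suite stays below 0.1% at roughly 150 executions.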

In terms of overall stability, after using the solution for a short period, I noticed fewer flakes caused by the infrastructure than with the previous solutions. However, the experiment was brief, so it is difficult to draw definitive conclusions.

Time and Scalability

As stated above, the number of shards can only be set through the Dashboard, not via the CLI. This is unusual and inconvenient, and wrappers such as Flank are commonly used to parallelize test runs. Flank is widely used and easy to configure through a single configuration file. It can also shard tests based on data from previous runs, but this requires keeping the corresponding results file in Google Cloud Storage.
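The idea behind sharding on previous run data can be illustrated with a classic greedy heuristic: sort tests by their last known duration and always give the next test to the least-loaded shard. This Python sketch is an assumption for illustration, not Flank’s actual algorithm, and the test names and durations are hypothetical.

```python
from __future__ import annotations

import heapq


def balance_by_duration(durations: dict[str, float], shard_count: int) -> list[list[str]]:
    """Assign tests longest-first, each to the shard with the least total time so far.

    `durations` maps a test name to its duration in seconds from a previous run.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    shard_count = max(1, min(shard_count, len(durations)))
    heap = [(0.0, index) for index in range(shard_count)]  # (assigned seconds, shard)
    shards: list[list[str]] = [[] for _ in range(shard_count)]
    for test, seconds in sorted(durations.items(), key=lambda item: item[1], reverse=True):
        load, index = heapq.heappop(heap)
        shards[index].append(test)
        heapq.heappush(heap, (load + seconds, index))
    return shards


# Hypothetical test names and durations from a previous run.
durations = {"LoginTest": 60, "FeedTest": 30, "ProfileTest": 30,
             "SearchTest": 20, "AboutTest": 10}
print(balance_by_duration(durations, 2))
```

In this example, the two shards end up with 80 and 70 seconds of work, instead of whatever split a plain round-robin would produce.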

Unfortunately, Flank does not provide the much-anticipated retry mechanism either.

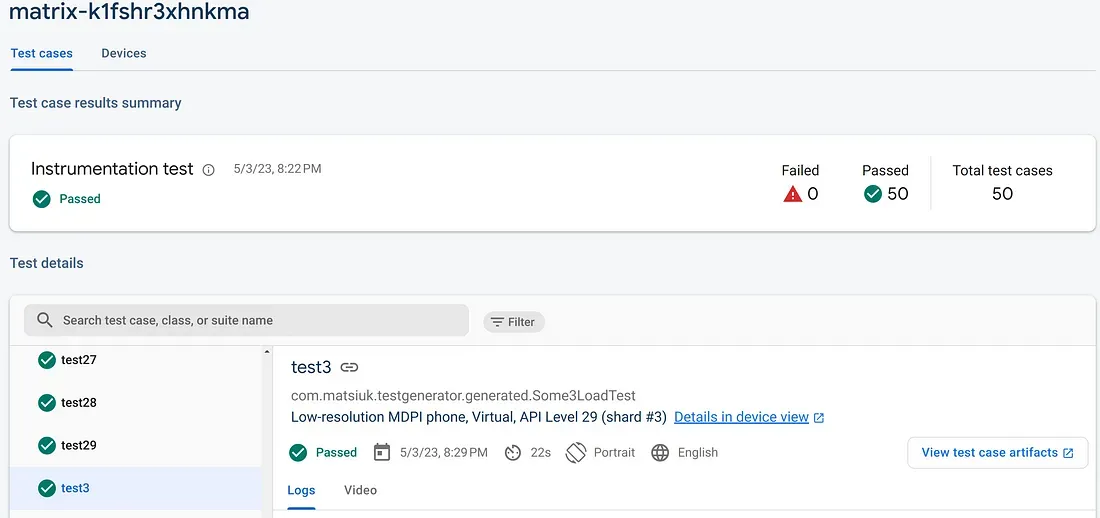

Here are the results of running my 50_0 sample suite on FTL with Flank, using the configuration parameters that enable smart sharding. The emulators used x86 architecture.

10 parallels:

- Preparation = 00:00:04

- Scheduling tests: 00:00:07

- Executing matrices: 00:06:05

- Generating reports: 00:00:05

- => Total run duration: 00:06:25

5 parallels:

- Preparation = 00:00:05

- Scheduling tests: 00:00:07

- Executing matrices: 00:07:54

- Generating reports: 00:00:06

- => Total run duration: 00:08:15
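Doubling the number of parallels from 5 to 10 should ideally halve the run time. The short Python sketch below computes how close the x86 measurements above come to that ideal.

```python
from __future__ import annotations


def to_seconds(hhmmss: str) -> int:
    """Convert an "hh:mm:ss" duration into seconds."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def scaling(slower: str, faster: str, parallel_ratio: float) -> tuple[float, float]:
    """Return (observed speedup, share of the ideal linear speedup achieved)."""
    speedup = to_seconds(slower) / to_seconds(faster)
    return speedup, speedup / parallel_ratio


# FTL + Flank, x86 emulators, 50_0 suite: (5 parallels, 10 parallels)
x86_runs = {
    "executing matrices": ("00:07:54", "00:06:05"),
    "total run duration": ("00:08:15", "00:06:25"),
}
for phase, (five, ten) in x86_runs.items():
    speedup, share = scaling(five, ten, parallel_ratio=10 / 5)
    print(f"{phase}: {speedup:.2f}x faster, {share:.0%} of the ideal 2x")
```

Matrix execution becomes only about 1.3x faster, roughly 65% of the ideal 2x speedup.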

Frankly, the speed is not great. FTL recently rolled out new ARM-based emulators, which are still in beta. Out of curiosity, I tried them out; here are the results.

[outdated info] 10 parallels:

- Preparation = 00:00:05

- Scheduling tests: 00:00:09

- Executing matrices: 00:03:14

- Generating reports: 00:00:06

- => Total run duration: 00:03:39

[Current as of June 16, 2023] 10 parallels:

- Preparation = 00:00:07

- Scheduling tests: 00:00:15

- Executing matrices: 00:03:29

- Generating reports: 00:00:11

- => Total run duration: 00:04:07

5 parallels:

- Preparation = 00:00:05

- Scheduling tests: 00:00:10

- Executing matrices: 00:05:00

- Generating reports: 00:00:07

- => Total run duration: 00:05:26
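To put the ARM results in perspective, this Python sketch compares their total run durations with the x86 results, using the June 16, 2023 measurement for 10 parallels and ignoring the outdated run.

```python
def to_seconds(hhmmss: str) -> int:
    """Convert an "hh:mm:ss" duration into seconds."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


# Total run durations of the 50_0 suite; ARM at 10 parallels uses the June 16, 2023 run.
totals = {
    ("x86", 5): "00:08:15",
    ("x86", 10): "00:06:25",
    ("arm", 5): "00:05:26",
    ("arm", 10): "00:04:07",
}
for parallels in (5, 10):
    x86 = to_seconds(totals[("x86", parallels)])
    arm = to_seconds(totals[("arm", parallels)])
    print(f"{parallels} parallels: ARM is {x86 / arm:.2f}x faster, saving {x86 - arm} s")
```

ARM emulators are about 1.5x faster in both configurations (169 seconds saved with 5 parallels, 138 seconds with 10).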

I am impressed by the potential of the ARM emulators and hope they will leave beta soon.

Reports

FTL reports are similar to those of other solutions, but they show no overall run duration and no video for each individual test. Videos of the device sessions are available, however.

Cost

FTL falls under the “Pay for spent hours/minutes” category. Virtual devices cost $1 per device per hour, which may seem appealing. However, keep in mind the various quotas that Google Cloud imposes, which are outlined in the documentation.
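For a rough sense of per-PR cost, the Python sketch below estimates the price of one run from the measured matrix execution time. It assumes that each of the 10 shards keeps its virtual device busy for the whole execution (an upper bound), that billing is proportional to device time at $1 per device-hour, and a hypothetical volume of 400 runs per month.

```python
def estimated_run_cost(matrix_seconds: float, shards: int,
                       price_per_device_hour: float = 1.0) -> float:
    """Upper-bound cost of one run: every shard is billed for the whole matrix execution."""
    device_hours = matrix_seconds * shards / 3600
    return device_hours * price_per_device_hour


RUNS_PER_MONTH = 400  # HYPOTHETICAL volume: roughly 20 PR builds per working day

per_run = estimated_run_cost(matrix_seconds=3 * 60 + 29, shards=10)  # ARM, 10 parallels
print(f"per run: ${per_run:.2f}, per month: ${per_run * RUNS_PER_MONTH:.0f}")
```

With the ARM 10-parallel measurement (00:03:29), this comes to about $0.58 per run and about $232 per month; quotas and any billing granularity are not modeled.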

Summary

In this article, I have explored the two most popular solutions for running UI tests in the cloud. For occasional runs, BrowserStack and Firebase Test Lab are adequate options. Running UI tests on every PR, however, brings additional requirements, and neither of these solutions is ideal for that scenario.

Stay tuned for the upcoming articles.

Additional paragraphs

How to set up Firebase Test Lab

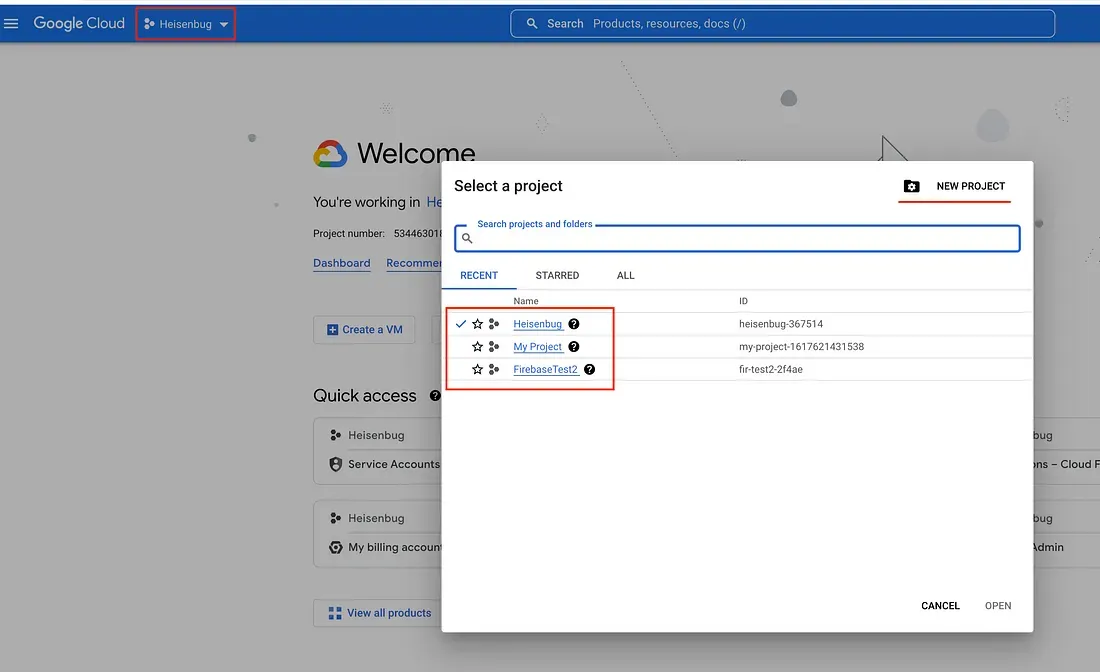

First, create a Google Cloud account:

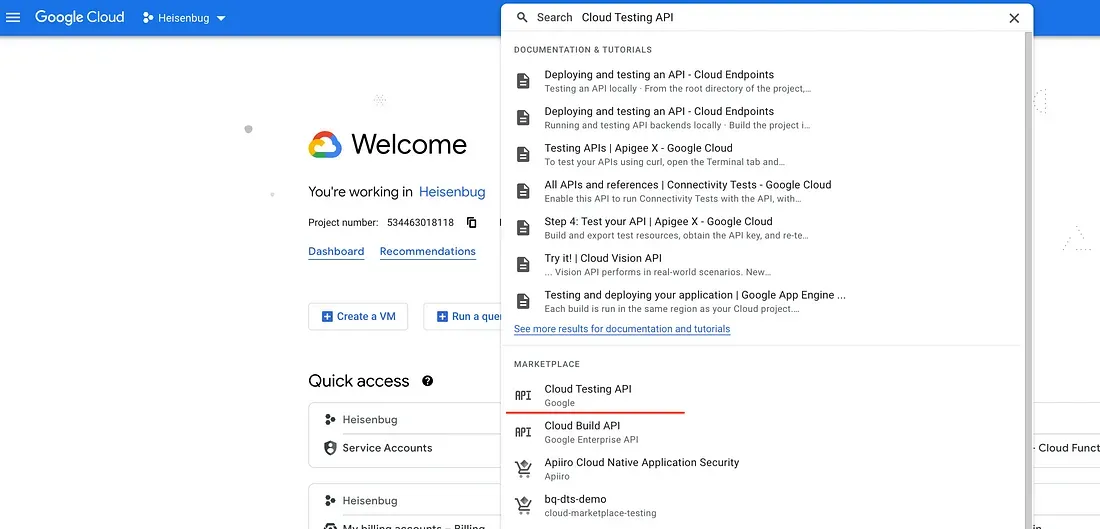

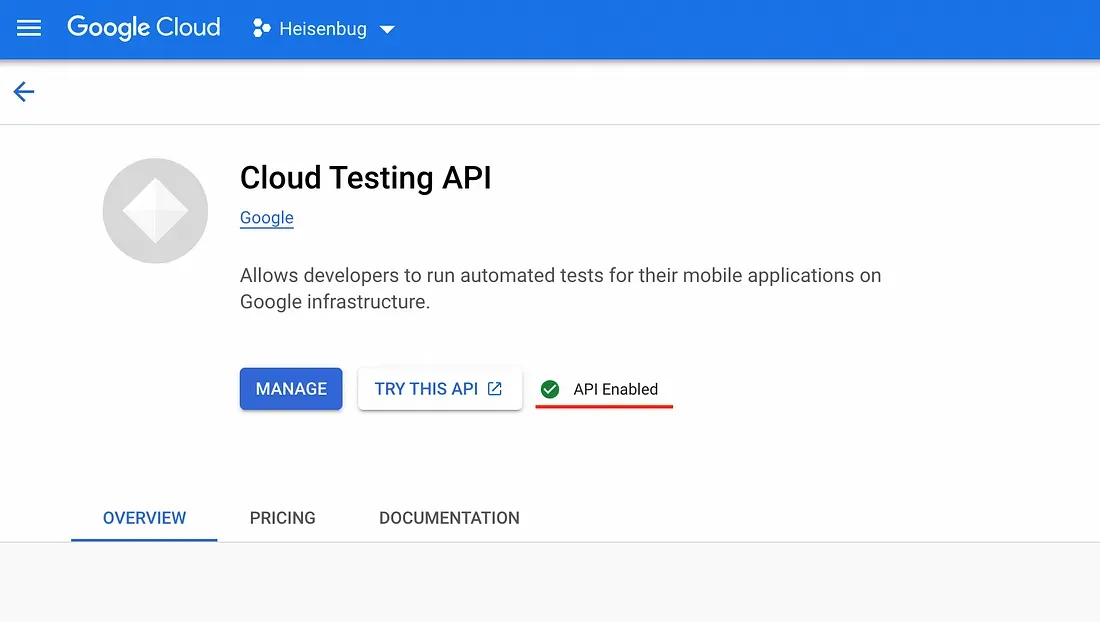

Enable the “Cloud Testing API” (use Search to find it).

In the same way, enable the “Cloud Tool Results API” and the “Cloud Functions API”.
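If you prefer the command line to the console, the same three APIs can be enabled with `gcloud services enable`. The sketch below builds that command in Python; it assumes gcloud is installed and authenticated with an account allowed to enable services, and `my-project-id` is a placeholder.

```python
from __future__ import annotations

import shlex

REQUIRED_APIS = (
    "testing.googleapis.com",         # Cloud Testing API
    "toolresults.googleapis.com",     # Cloud Tool Results API
    "cloudfunctions.googleapis.com",  # Cloud Functions API
)


def enable_apis_command(project_id: str) -> list[str]:
    """argv that enables the APIs listed above via the gcloud CLI."""
    return ["gcloud", "services", "enable", *REQUIRED_APIS, f"--project={project_id}"]


print(shlex.join(enable_apis_command("my-project-id")))  # placeholder project ID
```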

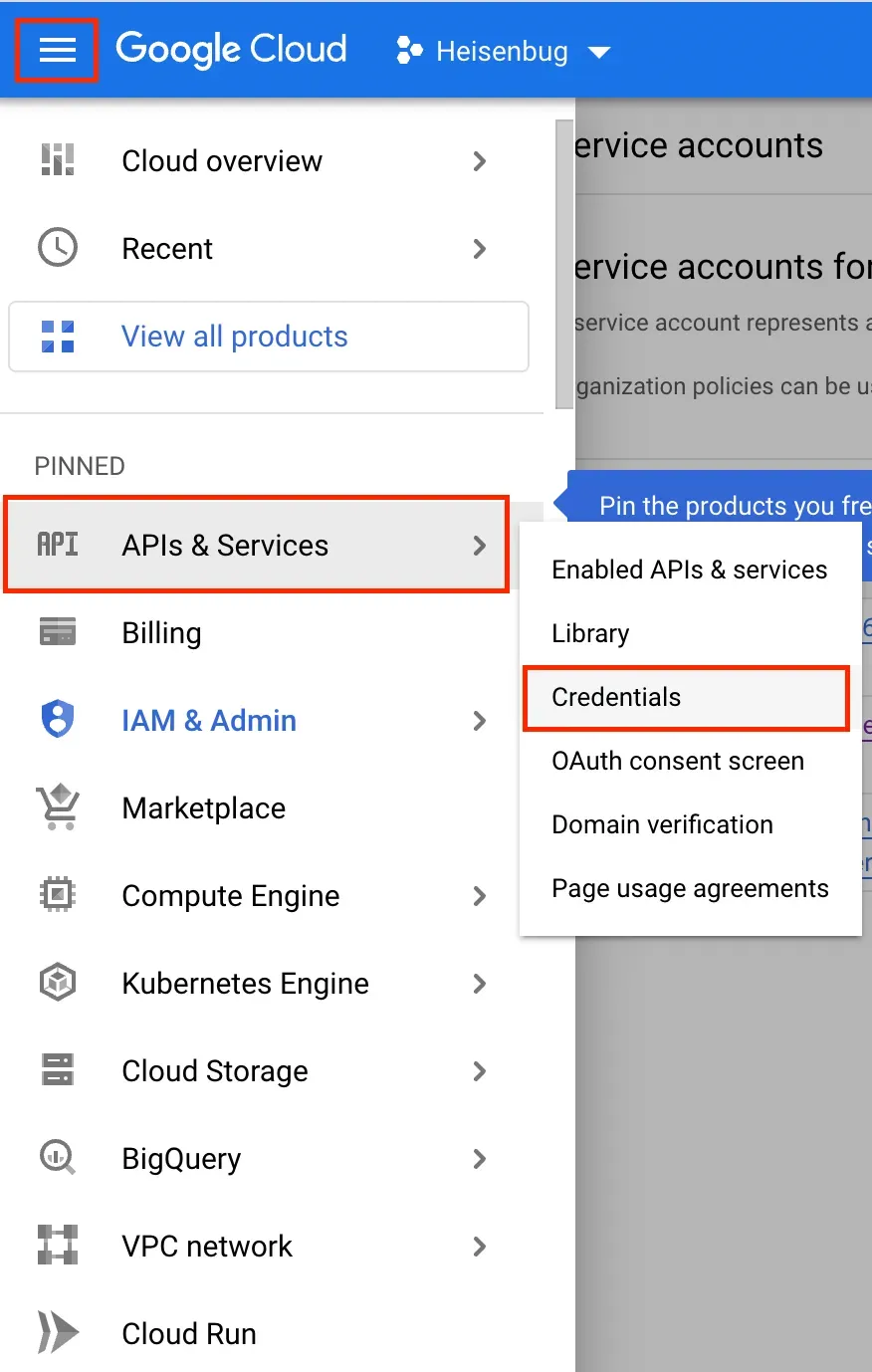

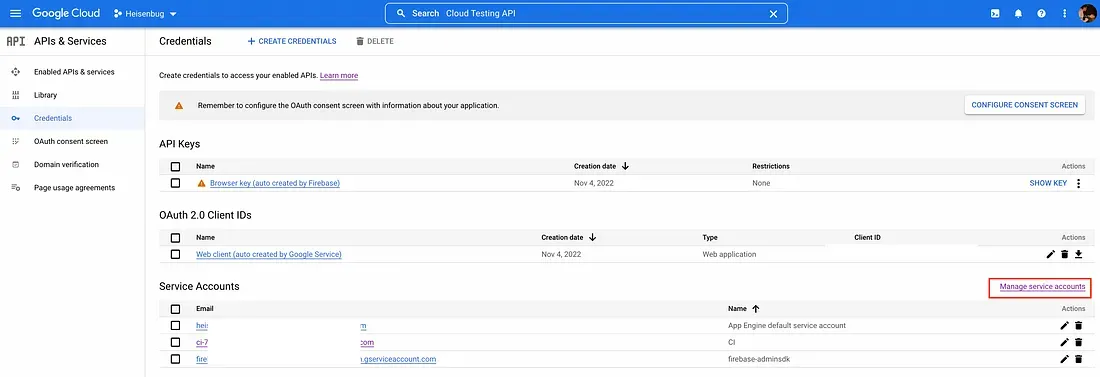

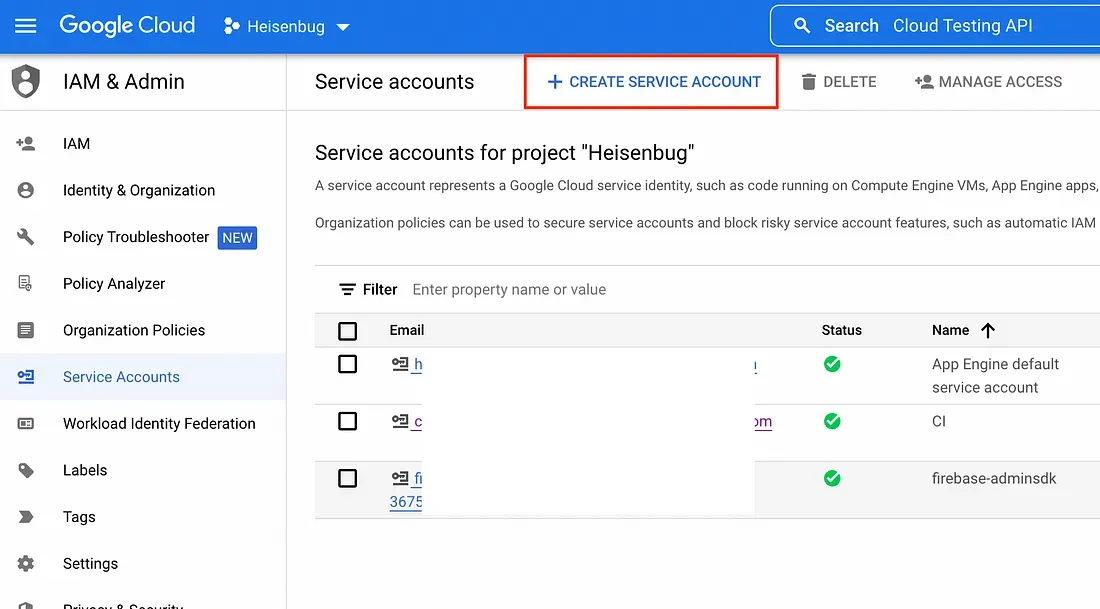

Next, create a “Service Account” and download its JSON key:

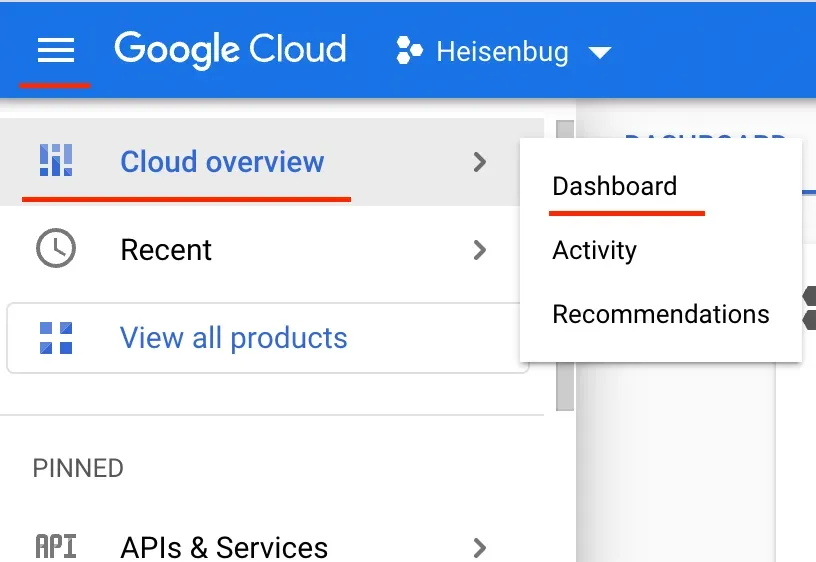

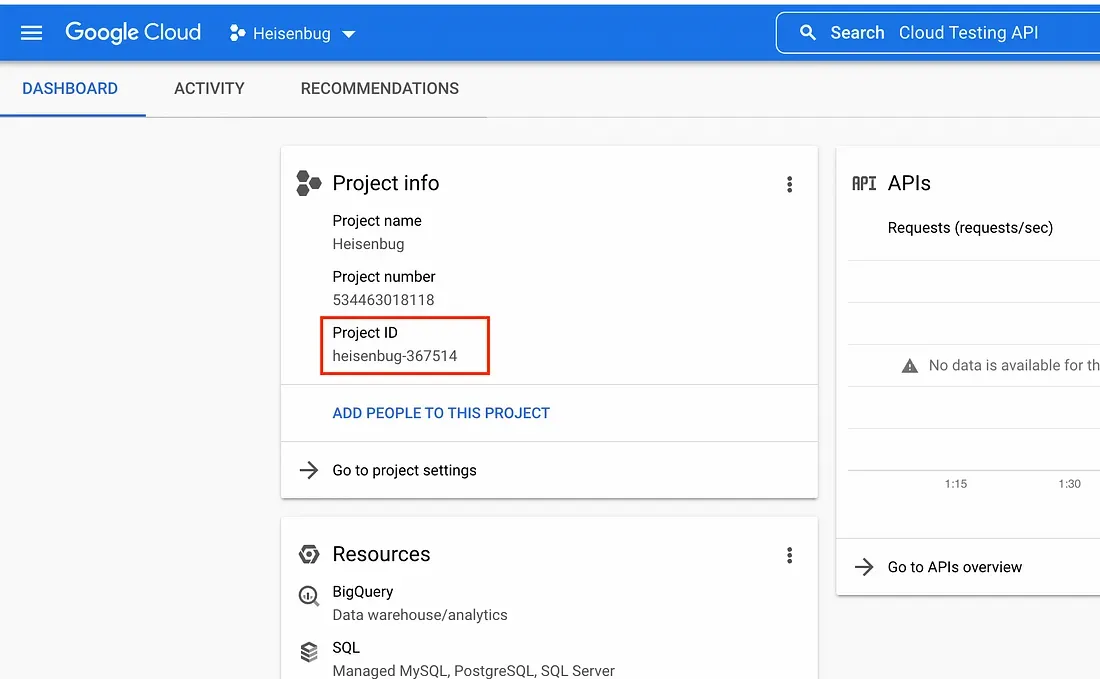

Keep the downloaded JSON file. You will also need the “Project ID”; here is how to find it:

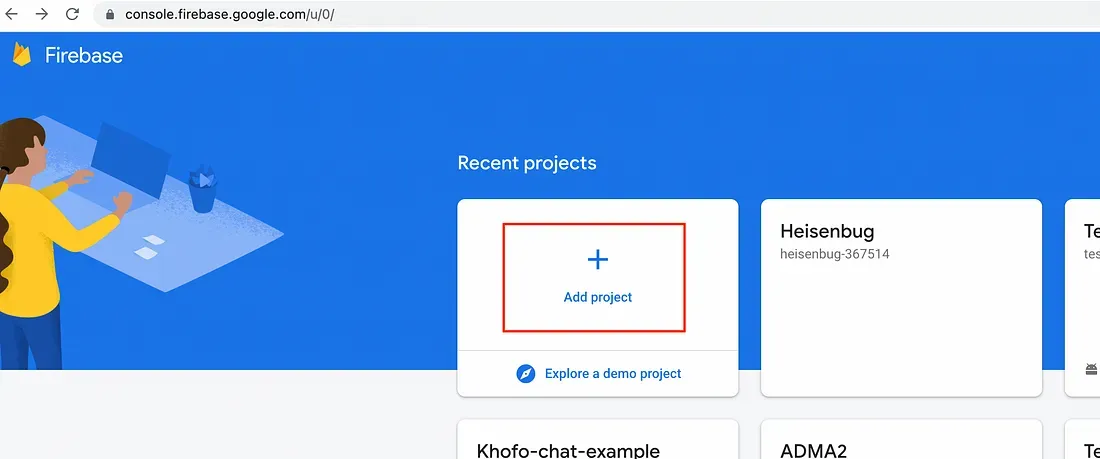

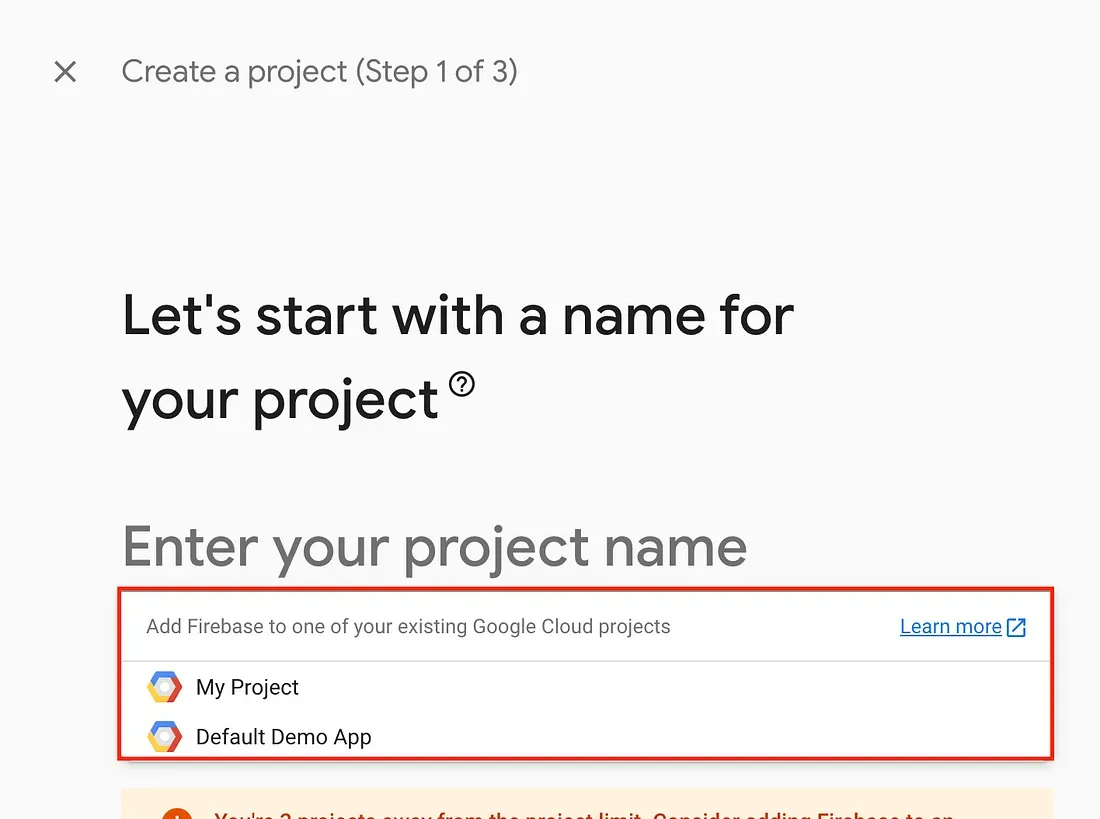
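The JSON key and the Project ID are then used to point gcloud at the project. A minimal sketch, assuming the key was saved as `service-account.json` (a hypothetical file name) and `my-project-id` stands in for your Project ID:

```python
from __future__ import annotations

import shlex


def gcloud_setup_commands(key_file: str, project_id: str) -> list[list[str]]:
    """gcloud calls that authenticate as the service account and select the project."""
    return [
        ["gcloud", "auth", "activate-service-account", f"--key-file={key_file}"],
        ["gcloud", "config", "set", "project", project_id],
    ]


# Hypothetical key file name and placeholder project ID.
for command in gcloud_setup_commands("service-account.json", "my-project-id"):
    print(shlex.join(command))
```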

The last step is to attach the created Google Cloud project to Firebase Console:
